Vision SDK

Turn any connected camera into an AI-powered copilot: the Vision SDK is a modular platform for fleet management, ADAS, and augmented reality.

Augmented reality

Build heads-up navigation with turn-by-turn directions and custom objects

ADAS

Enable driver safety powered by real-time scene understanding with classification, detection, and segmentation

Live mapping

Visualize road features, signs, traffic lights and critical events on a customizable map

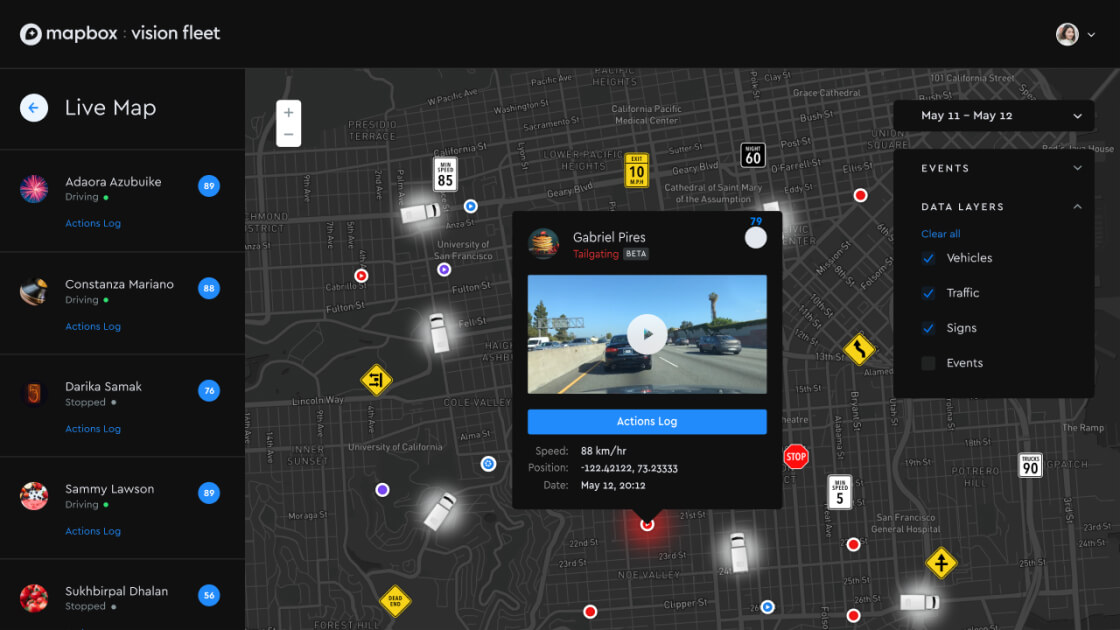

Alert drivers in real time

Integrate the Vision SDK into your dashcam or mobile app and send smart alerts when dangerous driving is detected. Create custom alerts for speeding, tailgating, rolling stops, and other risky driving behaviors.

Monitor fleets with dashcam integration

Connect in-vehicle cameras for a unified view of an entire fleet. Automatically detect and document risky driving behavior. Aggregate driving data for AI performance scores and incident reports with supporting images or videos.

Enable lane-level navigation

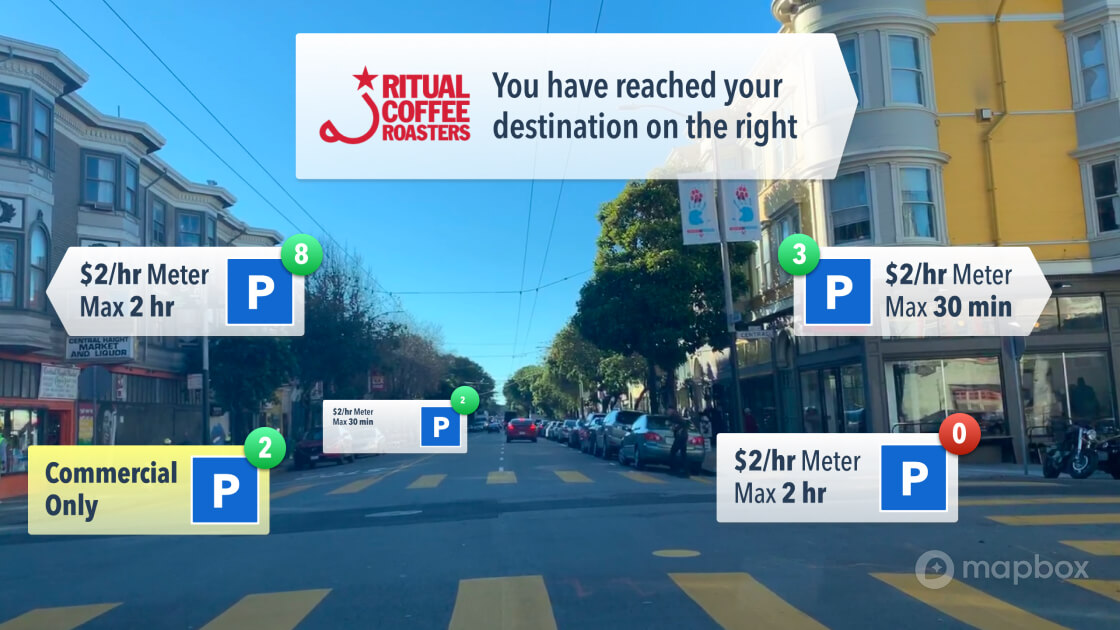

Send more contextual navigation instructions with augmented reality visual or audio cues. Use lane detection to alert drivers when a lane change is needed to complete a maneuver.

Add visual context to navigation

Build a feature-rich navigation experience with augmented reality. Overlay additional POI details, tips on parking, or maneuver indicators.

How it works

The Mapbox Vision SDK runs computer vision algorithms optimized for today’s driving platforms, including automotive systems, dashcams, and mobile phones. Whether you are building an AR navigation experience, an application with ADAS features, or a fleet management tool, the Vision SDK’s modular architecture puts developers in the driver’s seat. All you need to get started is a mapbox.com account.

Frequently Asked Questions

General

What is the Mapbox Vision SDK?

The Mapbox Vision SDK is a tool for developers that brings visual context to the navigation experience. It uses efficient neural networks to process imagery directly on mobile or embedded devices, turning any connected camera into a second set of eyes for your car. Situational context is interpreted with AI-powered image processing that is fast enough to run in real time, yet efficient enough to run locally on today’s smartphones. Live interpretation of road conditions, combined with connectivity to the rest of the Mapbox ecosystem, gives drivers access to fresher, more granular driving information that can inform real-time recommendations, improving safety, user experience, and efficiency.

What can I do with the Vision SDK?

The Vision SDK is a lightweight, multi-platform solution for live location, connecting augmented reality and artificial intelligence to a reimagined navigation experience. The Vision SDK brings the developer into the driver’s seat with a comprehensive set of real-time navigation and mapping features that fit into a single mobile or embedded application. In doing so, the Vision SDK enables customizable augmented reality navigation experiences, classification and display of regulatory and warning signs, driver alerts for nearby vehicles, cyclists, and pedestrians, and more.

How does the Vision SDK tie into the rest of Mapbox’s products and services?

Mapbox’s live location platform incorporates dozens of different data sources to power our maps. Map data originates from sensors as far away as satellites and as close up as street level imagery. Conventionally, collected imagery requires extensive processing before a map can be created or updated. The innovation of the Vision SDK is its ability to process live data with distributed sensors, keeping up with our rapidly changing world. Developers will be able to use this new ability to create richer, more immersive experiences with Mapbox maps, navigation, and search.

Developing with Vision

What are the components of the Vision SDK?

On each supported platform, Mapbox Vision consists of four modules: Vision, VisionAR, VisionSafety, and VisionCore:

- Vision is the primary SDK, needed for any application of Mapbox Vision. Its components enable camera configuration, display of classification, detection, and segmentation layers, lane feature extraction, and other interfaces. Vision accesses real-time inference running in VisionCore.

- VisionAR is an add-on module for Vision used to create customizable augmented reality experiences. It allows configuration of the user’s route visualization: lane material (shaders, textures), lane geometry, occlusion, custom objects, and more.

- VisionSafety is an add-on module for Vision used to create customizable alerts for speeding, nearby vehicles, cyclists, pedestrians, lane departures, and more.

- VisionCore is the core logic of the system, including all machine learning models. Importing Vision into your project automatically brings VisionCore along.

What is the minimum configuration for Mapbox Vision?

To work with only the foundational components of Vision (segmentation, detection, and classification layers), developers need only import the Vision SDK for their desired platform (iOS, Android, or Embedded Linux). Importing Vision SDK will automatically assemble the requisite VisionCore.

How do I set up AR Navigation?

Creating an augmented reality (AR) navigation experience requires the modules Vision, VisionAR, and Mapbox’s Navigation SDK.
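The module requirements described in the answers above can be summarized as a small lookup. The Python sketch below is illustrative only: the use-case keys and the `required_modules` helper are hypothetical names chosen for this example, not Vision SDK identifiers. Only the module names come from this FAQ, and VisionCore is omitted because importing Vision brings it along automatically.

```python
# Illustrative lookup only. The use-case keys and this helper are hypothetical;
# the module names are taken from the FAQ text.
REQUIREMENTS = {
    "core_layers": ["Vision"],  # segmentation, detection, classification
    "ar_navigation": ["Vision", "VisionAR", "Navigation SDK"],
    "safety_alerts": ["Vision", "VisionSafety"],
}


def required_modules(use_cases):
    """Return the modules to import for the given use cases, without duplicates."""
    modules = []
    for use_case in use_cases:
        for module in REQUIREMENTS[use_case]:
            if module not in modules:
                modules.append(module)
    return modules


print(required_modules(["ar_navigation", "safety_alerts"]))
# ['Vision', 'VisionAR', 'Navigation SDK', 'VisionSafety']
```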

What is the purpose of the VisionSafety Module?

Developers can create features to notify and alert drivers about road conditions and potential hazards with the VisionSafety SDK, an add-on module that uses segmentation, detection, and classification information passed from the Vision SDK. For example, developers can monitor speed limits and other critical signage using sign classification and track the most recently observed speed limit. When the detected speed of the vehicle is in excess of the last observed speed limit, programmable alerts can be set. VisionSafety can also send programmable alerts for nearby pedestrians, cyclists, and other vehicles.
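The speed limit example above boils down to remembering the last classified limit and comparing it with the vehicle's speed. Here is a minimal Python sketch of that logic. `SpeedLimitMonitor`, its method names, and the `tolerance_kmh` margin are assumptions made for illustration; they are not part of the VisionSafety API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpeedLimitMonitor:
    """Hypothetical helper (not a VisionSafety class) that tracks the last
    observed speed limit and decides whether to raise a speeding alert."""

    tolerance_kmh: float = 0.0  # assumed app-defined margin before alerting
    last_limit_kmh: Optional[float] = None

    def on_sign_classified(self, limit_kmh: float) -> None:
        # Remember the most recently observed speed limit sign.
        self.last_limit_kmh = limit_kmh

    def should_alert(self, vehicle_speed_kmh: float) -> bool:
        # No alert is possible until at least one limit has been observed.
        if self.last_limit_kmh is None:
            return False
        return vehicle_speed_kmh > self.last_limit_kmh + self.tolerance_kmh


monitor = SpeedLimitMonitor(tolerance_kmh=5.0)
monitor.on_sign_classified(50.0)
print(monitor.should_alert(53.0))  # False: within the 5 km/h tolerance
print(monitor.should_alert(60.0))  # True: above the limit plus tolerance
```

Keeping the tolerance configurable is one way to make the alert "programmable" in the sense described above; a real application would feed this from the SDK's sign classification and speed signals.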

What kind of lane information is exposed by Vision?

The Vision SDK's segmentation provides developers with the following pieces of lane information: number of lanes, lane widths, lane edge types, and directions of travel for each lane. A set of points describing each lane edge is also available.
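One way to picture the lane information listed above is as a small data model. The Python sketch below is an assumption about how an application might hold this data, not the SDK's actual types: the class names, field names, units, and label strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # assumed 2D coordinates; the real frame may differ


@dataclass
class LaneEdge:
    edge_type: str        # assumed labels such as "solid" or "dashed"
    points: List[Point]   # set of points describing the edge


@dataclass
class Lane:
    width_m: float        # assumed unit: meters
    direction: str        # assumed labels such as "forward" or "backward"
    left_edge: LaneEdge
    right_edge: LaneEdge


@dataclass
class RoadDescription:
    lanes: List[Lane]

    @property
    def lane_count(self) -> int:
        # The number of lanes follows directly from the lane list.
        return len(self.lanes)


edge_a = LaneEdge("solid", [(0.0, 0.0), (0.0, 10.0)])
edge_b = LaneEdge("dashed", [(3.5, 0.0), (3.5, 10.0)])
road = RoadDescription(lanes=[Lane(3.5, "forward", edge_a, edge_b)])
print(road.lane_count)  # 1
```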

Is there a way to use Vision with exogenous inputs (e.g. vehicle signals)?

Yes. We now support interfaces to arbitrary exogenous sensors (e.g. differential GPS, vehicle speed, vehicle IMU). This will be especially helpful for developers using embedded platforms.

What can’t I do with the Vision SDK?

A special license for the Vision SDK is required for developers who wish to save, download, or otherwise store any content, data, or other information generated by the Vision SDK. Contact sales to find out more. The Vision SDK is meant to help you better navigate and manage fleets, not to create map databases, so please don’t use it to create a general database of locations, road features, or any other map data. Full details on Vision SDK restrictions are posted in our Terms of Service.

Using Vision

In which regions is the Vision SDK supported?

Semantic segmentation, object detection, and following distance detection will work on virtually any road. The core functionality of the augmented reality navigation with turn-by-turn directions is supported globally. AR navigation with live traffic is supported in over 50 countries, covering all of North America, most of Europe, Japan, South Korea, and several other markets. Sign coverage is currently optimized for North America, Europe, and China, with basic support for common signs like speed limits in all regions. Sign classification for additional regions is under development.

Can the Vision SDK read all road signs?

The latest version of the Vision SDK recognizes over 200 of the most common road signs, including speed limits, regulatory signs (merges, turn restrictions, no passing, etc.), warning signs (traffic signal ahead, bicycle crossing, narrow road, etc.), and many others. The Vision SDK does not read individual letters or words on signs, but rather learns to recognize each sign type holistically. As a result, it generally cannot interpret guide signs (e.g. “Mariposa St. Next Exit”). We’re exploring Optical Character Recognition (OCR) for a future release.

What’s the best way to test my Vision SDK project?

The best way to test the Vision SDK is to have your device pointed ahead at the road in a moving vehicle. However, there are some basic tests you can do without leaving your desk. Classification, detection, and segmentation layers can all be tested using pre-recorded driving footage from YouTube, Vimeo, or other video libraries. Developers can also use our data recorder and resimulation tools to play back previous driving footage (with device, IMU, and GPS data) with different settings. See our documentation pages for more details.

What are the requirements for calibration?

AR navigation and Safety mode require calibration, which takes 20-30 seconds of normal driving. (Your device will not be able to calibrate without being mounted.) Because the Vision SDK is designed to work with an arbitrary mounting position, it needs this short period of calibration when it’s initialized to be able to accurately gauge the locations of other objects in the driving scene. Once calibration is complete, the Vision SDK will automatically adjust to vibrations and changes in orientation while driving.

What is the best way for users to mount their devices when using the Vision SDK?

Whether you are using an embedded or mobile device, the Vision SDK works best when the camera is mounted either to the windshield or the dashboard of your vehicle with a good view of the road. We’ve tested a lot of mounts.

Keep in mind when setting up a device mount that shorter mounts vibrate less. You can mount the device either to your windshield or to the dashboard itself. The Vision SDK works best when the device is near or behind your rear-view mirror, but please note your local jurisdiction’s limits on where mounts may be placed.

Can I use the Vision SDK with an external camera?

Yes, developers will be able to connect Vision-enabled devices to remote cameras. Image frames from external cameras can be transmitted over WiFi or via a wired connection.

Will the Vision SDK drain my battery?

The Vision SDK consumes CPU, GPU, and other resources to process road imagery on-the-fly. Just as with any other navigation or video application, we recommend having your device plugged in if you are going to use it for extended periods of time.

Will my device get hot if I run the Vision SDK for a long time?

Mobile and embedded devices warm up over time, both from exposure to direct sunlight and because the onboard AI consumes a significant amount of compute resources. However, we have not run into any heat issues with moderate-to-heavy use.

Will the Vision SDK work in countries that drive on the left?

Yes.

Does the Vision SDK work at night?

The Vision SDK works best under good lighting conditions. However, it does function at night, depending on how well the road is illuminated. In cities with ample street lighting, for example, the Vision SDK still performs quite well.

Does the Vision SDK work in the rain and/or snow?

Yes. Just like human eyes, however, the Vision SDK works better the better it can see. Certain features, such as lane detection, will not work when the road is covered with snow.

Tech

What is “classification”?

In computer vision, classification is the process by which an algorithm identifies the presence of a feature in an image. For example, the Vision SDK classifies whether there are certain road signs in a given image.

What is “detection”?

In computer vision, detection is similar to classification, except that instead of only identifying whether a given feature is present, a detection algorithm also identifies where in the image the feature occurs. For example, the Vision SDK detects vehicles in each image and indicates where it sees them with bounding boxes. The Vision SDK supports the following detection classes: cars (or trucks), bicycles/motorcycles, pedestrians, traffic lights, and traffic signs.

What is “segmentation”?

In computer vision, segmentation is the process by which each pixel in an image is assigned to a category, or “class”. For example, the Vision SDK analyzes each frame of road imagery and paints each pixel a color corresponding to its underlying class. The Vision SDK supports the following segmentation classes: buildings, cars (or trucks), curbs, roads, non-drivable flat surfaces (such as sidewalks), single lane markings, double lane markings, other road markings (such as crosswalks), bicycles/motorcycles, pedestrians, sky, and unknown.
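To make the "painting" step concrete, the following Python sketch maps a tiny grid of class indices to colors. It is a toy illustration, not the SDK's implementation: the class names are a subset of the list above, while the index order, the RGB palette, and the `colorize` helper are arbitrary assumptions.

```python
# Toy example: class names are a subset of the FAQ list; the index order and
# RGB colors are arbitrary assumptions, not values used by the Vision SDK.
CLASSES = ["road", "car", "pedestrian", "sky", "unknown"]
PALETTE = {
    "road": (128, 64, 128),
    "car": (0, 0, 142),
    "pedestrian": (220, 20, 60),
    "sky": (70, 130, 180),
    "unknown": (0, 0, 0),
}


def colorize(mask):
    """Map a 2D grid of class indices to a 2D grid of RGB tuples."""
    return [[PALETTE[CLASSES[idx]] for idx in row] for row in mask]


# A 3x3 "image": sky on top, road below, one car pixel and one pedestrian pixel.
mask = [
    [3, 3, 3],
    [0, 1, 0],
    [0, 0, 2],
]
colored = colorize(mask)
print(colored[0][0])  # (70, 130, 180), the color assigned to "sky"
```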

What is the difference between detection and segmentation?

Detection identifies discrete objects (e.g., individual vehicles) and draws a bounding box around each one that is found. The number of detections changes from one image to the next, depending on what appears. Segmentation, on the other hand, goes pixel by pixel and assigns each pixel to a category. For a given segmentation model, the same number of pixels is classified and colored in every image. Features from segmentation can be any shape describable by a 2D pixel grid, while features from object detection are indicated with boxes defined by the four pixels at their corners.
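The difference in shape can be seen by reducing a pixel mask to a box. The Python sketch below computes the tight bounding box around all pixels of one class in a small grid. This is only an illustration of how a box is a coarser description than a mask; the `bounding_box` helper is hypothetical, and the Vision SDK's detector is a separate model that does not derive boxes this way.

```python
def bounding_box(mask, class_idx):
    """Return (x_min, y_min, x_max, y_max) around all pixels equal to
    class_idx, or None if the class does not appear in the mask."""
    coords = [
        (x, y)
        for y, row in enumerate(mask)
        for x, value in enumerate(row)
        if value == class_idx
    ]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))


# Class 1 forms an L-shaped region of three pixels.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]
print(bounding_box(mask, 1))  # (1, 1, 2, 2)
```

The box spans four pixels even though only three belong to the class, which is why segmentation can describe arbitrary shapes while a detection box cannot.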

Can I rely on the Vision SDK to make driving decisions?

No. The Vision SDK is designed to provide context to aid driving, but does not replace any part of the driving task. During beta, feature detection is still being tested, and may not detect all hazards.

Data and privacy

Do I need to use my data plan to use the Vision SDK?

In the standard configuration, the Vision SDK requires connectivity for initialization, road feature extraction, and augmented reality navigation (VisionAR). However, the neural networks used to run classification, detection, and segmentation all run on-device without needing cloud resources. For developers interested in running the Vision SDK offline, please contact us.

How much data does the Vision SDK use?

The Vision SDK beta uses a maximum of 30 MB of data per hour. For reference, this is less than half of what Apple Music uses on the lowest quality setting.
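For planning purposes, the 30 MB per hour ceiling can be converted into an average bitrate and a monthly total. The short Python sketch below does that arithmetic. It assumes decimal megabytes (1 MB = 1,000,000 bytes), and the two hours of driving per day is a made-up figure for the example.

```python
MAX_MB_PER_HOUR = 30  # maximum stated for the Vision SDK beta


def average_kbps(mb_per_hour, bytes_per_mb=1_000_000):
    """Average bitrate in kilobits per second for a given hourly volume."""
    return mb_per_hour * bytes_per_mb * 8 / 3600 / 1000


def monthly_mb(mb_per_hour, hours_per_day, days=30):
    """Upper bound on monthly data volume in MB."""
    return mb_per_hour * hours_per_day * days


print(round(average_kbps(MAX_MB_PER_HOUR), 1))        # 66.7 (kbit/s)
print(monthly_mb(MAX_MB_PER_HOUR, hours_per_day=2))   # 1800 (MB over 30 days)
```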

What type of road network data is Mapbox getting back from the Vision SDK?

The Vision SDK sends back limited telemetry data from the device, including location data, road feature detections, and certain road imagery, at a data rate not to exceed 30 MB per hour. This data is used to improve Mapbox products and services, including the Vision SDK. As with other Mapbox products and services, Mapbox only collects the limited personal information described in our privacy policy.